SSV Protocol & Implementation Deep Dive

The Secret-Shared-Validator (SSV) protocol provides decentralized infrastructure for distributing validator operations on Ethereum. Here is a deep dive into the tech!

Secret-Shared-Validator (SSV) is a protocol for distributing the operations of an Ethereum validator between parties that do not need to trust each other, using threshold signatures and a BFT (consensus) protocol.

For a quick intro, please read this.

This piece is a deep dive into the full Ethereum beacon-chain attestation duty process for an SSV node. It covers a single-validator SSV instance, for attestation duties ONLY, with code snippets and references.

All of the info below is relevant to v0.0.15 of the SSV node.

An SSV node is a piece of software that uses validator shares and network configurations as input, and if executed properly, outputs an active and efficient distributed Ethereum validator. Validator shares and network management can take many shapes and forms, in our case:

1) hard-coded shares via config files, OR 2) dynamic network configuration via a set of smart contracts.

Option #2 is the more interesting choice, and will likely be more common.

An SSV node is connected to an Ethereum node (known as eth1, or the ‘execution layer’) to fetch events originating from the management smart contracts. The contracts’ main duty is to keep a registry of operators and a list of validators (who assign shares to operators).

Every time a new operator or a new validator is created (by submitting an on-chain tx), the node picks up the event and stores the data locally.

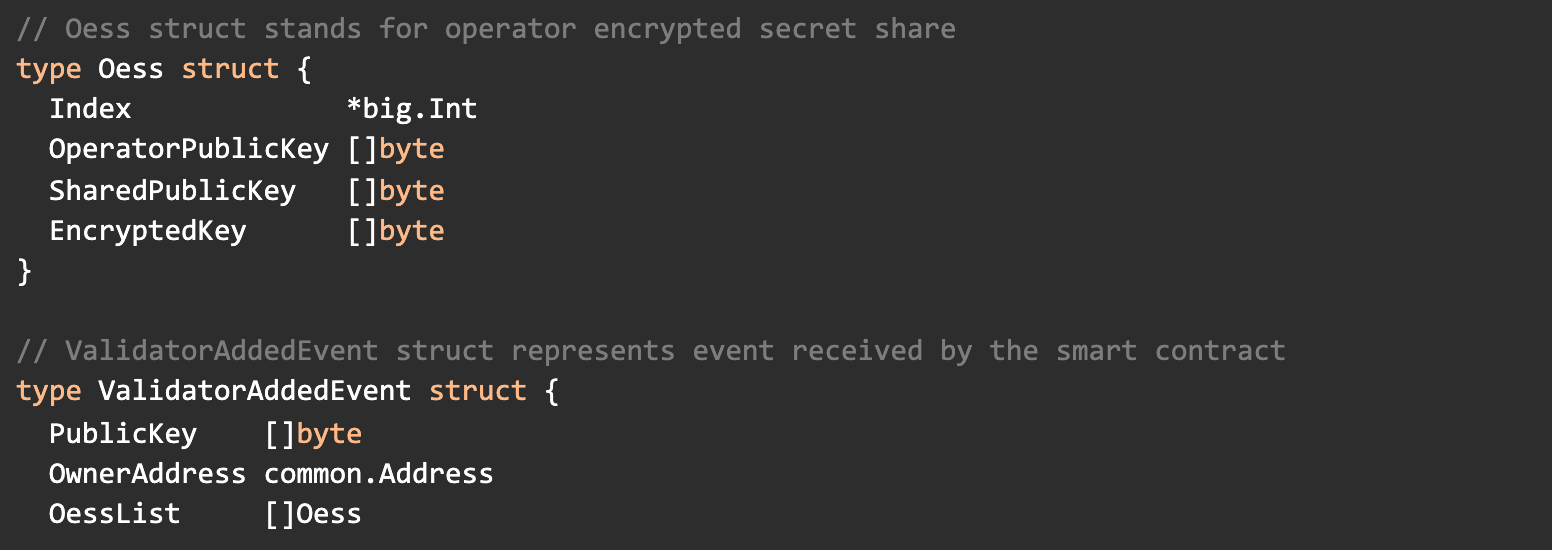

Every SSV operator has a unique ID and a public encryption key used by users to encrypt the operator shares. If an operator is selected to host a network validator it will parse the new encrypted share and store it locally.
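To make the flow above a bit more concrete, here is a minimal sketch of how a node might react to registry events. All type and function names (`OperatorAdded`, `ValidatorAdded`, `Registry`, `HandleValidator`) are hypothetical and are not taken from the SSV node code; decryption of the share with the operator's private key is left out.

```go
package main

import "fmt"

// OperatorAdded is a hypothetical shape for a "new operator" contract event.
type OperatorAdded struct {
	ID        uint64
	PublicKey string // encryption key users encrypt shares with
}

// ValidatorAdded is a hypothetical shape for a "new validator" contract event.
type ValidatorAdded struct {
	ValidatorPK     string
	EncryptedShares map[uint64][]byte // operator ID -> encrypted share
}

// Registry is the node's local view of the on-chain data.
type Registry struct {
	selfID    uint64
	operators map[uint64]OperatorAdded
	shares    map[string][]byte // validator PK -> our (still encrypted) share
}

func NewRegistry(selfID uint64) *Registry {
	return &Registry{
		selfID:    selfID,
		operators: make(map[uint64]OperatorAdded),
		shares:    make(map[string][]byte),
	}
}

// HandleOperator stores every operator it sees.
func (r *Registry) HandleOperator(ev OperatorAdded) {
	r.operators[ev.ID] = ev
}

// HandleValidator stores a share only if this operator was selected.
// It reports whether a share was stored.
func (r *Registry) HandleValidator(ev ValidatorAdded) bool {
	share, ok := ev.EncryptedShares[r.selfID]
	if !ok {
		return false // validator did not pick this operator
	}
	r.shares[ev.ValidatorPK] = share
	return true
}

func main() {
	r := NewRegistry(2)
	r.HandleOperator(OperatorAdded{ID: 2, PublicKey: "op-2-pubkey"})
	stored := r.HandleValidator(ValidatorAdded{
		ValidatorPK:     "validator-A",
		EncryptedShares: map[uint64][]byte{1: {0x01}, 2: {0x02}, 3: {0x03}, 4: {0x04}},
	})
	fmt.Println("share stored:", stored)
}
```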

The data structs used for the process are the following:

A newly found share will also trigger the addition of a new validator management struct, which is responsible, from the moment it’s added, for managing all future duty executions.

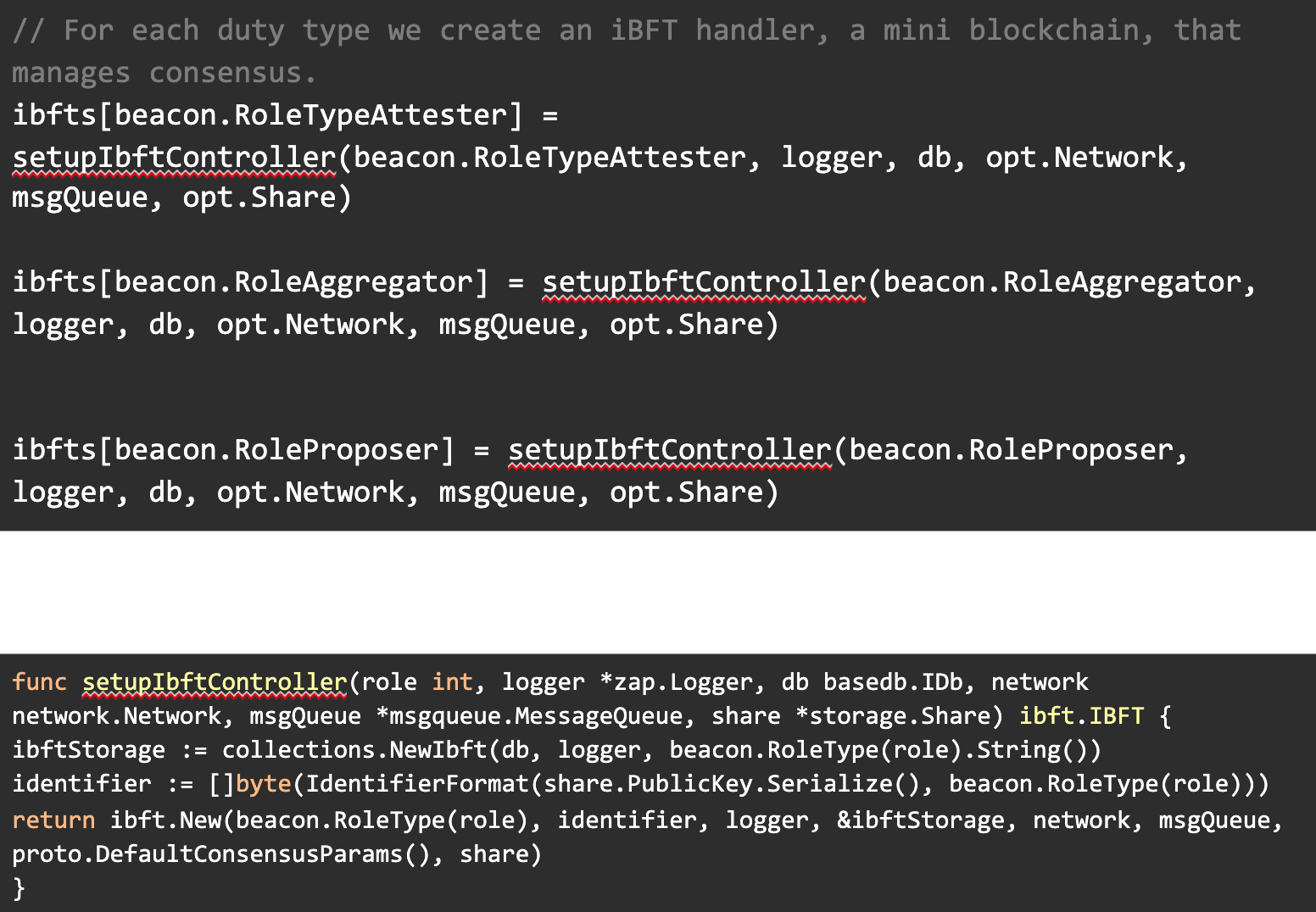

When a new validator struct is initialized, another important piece of code creates new Istanbul BFT (iBFT) handlers. Each handler is comparable to a ‘mini’ blockchain, one per validator and per duty type.

Each iBFT handler has a unique identifier (lambda) which keeps the handlers separate. The identifier is deterministic and constant.
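As an illustration of what "deterministic and constant" means here, the sketch below derives an identifier from the validator public key and the duty type. The exact format (`<hex pubkey>_<duty type>`) and the names `Identifier` and `DutyType` are assumptions made for this example, not the node's actual encoding.

```go
package main

import "fmt"

// DutyType is a hypothetical label for the kind of duty a handler serves.
type DutyType string

const Attester DutyType = "ATTESTER"

// Identifier builds a lambda from inputs that never change for a given
// validator and duty type, so every node derives the same value.
// The "<hex pubkey>_<duty>" layout is an assumption for illustration.
func Identifier(validatorPK []byte, duty DutyType) []byte {
	return []byte(fmt.Sprintf("%x_%s", validatorPK, duty))
}

func main() {
	pk := []byte{0xab, 0xcd}
	fmt.Println(string(Identifier(pk, Attester)))
}
```

Because the identifier depends only on static inputs, two nodes in the same committee will always route a message to the same handler without any coordination.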

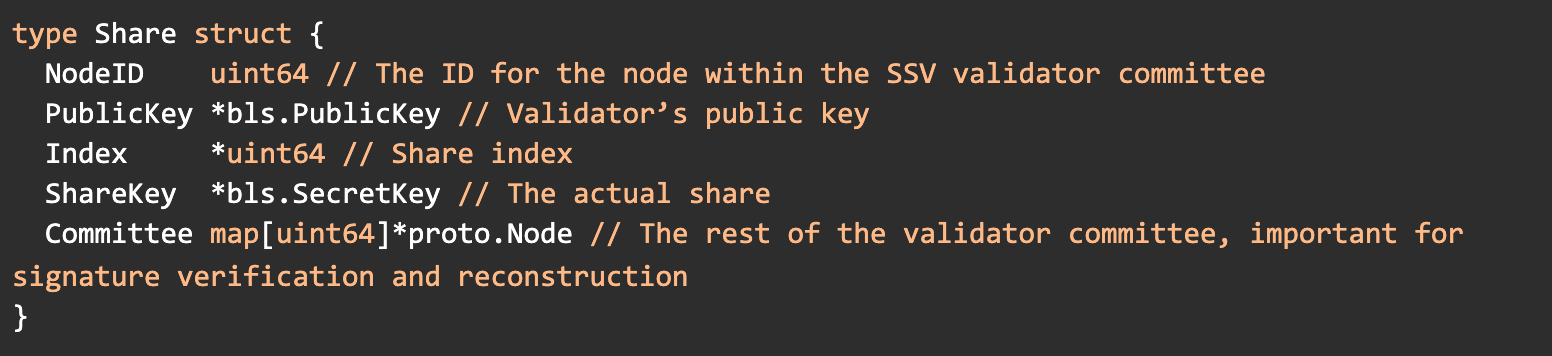

Another important struct to get familiar with is the share structure. This struct holds all the information needed (other than network configuration) for the SSV node to successfully participate in and contribute to the network.
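For readers who prefer code to diagrams, a share could look roughly like the sketch below. The field names and helper methods are guesses for illustration only and should not be read as the node's real definition.

```go
package main

import "fmt"

// Share is a hypothetical layout of the data one operator holds
// for one validator. Field names are assumptions, not the real struct.
type Share struct {
	NodeID      uint64            // this operator's ID in the committee
	ValidatorPK []byte            // public key of the full validator
	ShareKey    []byte            // this operator's secret key share
	Committee   map[uint64][]byte // operator ID -> that operator's share public key
}

// CommitteeSize returns the number of operators running this validator.
func (s *Share) CommitteeSize() int { return len(s.Committee) }

// IsMember reports whether an operator ID belongs to the committee,
// which is useful for rejecting messages from unknown signers.
func (s *Share) IsMember(id uint64) bool {
	_, ok := s.Committee[id]
	return ok
}

func main() {
	s := &Share{
		NodeID:      1,
		ValidatorPK: []byte{0xab, 0xcd},
		Committee:   map[uint64][]byte{1: {0x01}, 2: {0x02}, 3: {0x03}, 4: {0x04}},
	}
	fmt.Println("committee size:", s.CommitteeSize())
	fmt.Println("operator 5 is a member:", s.IsMember(5))
}
```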

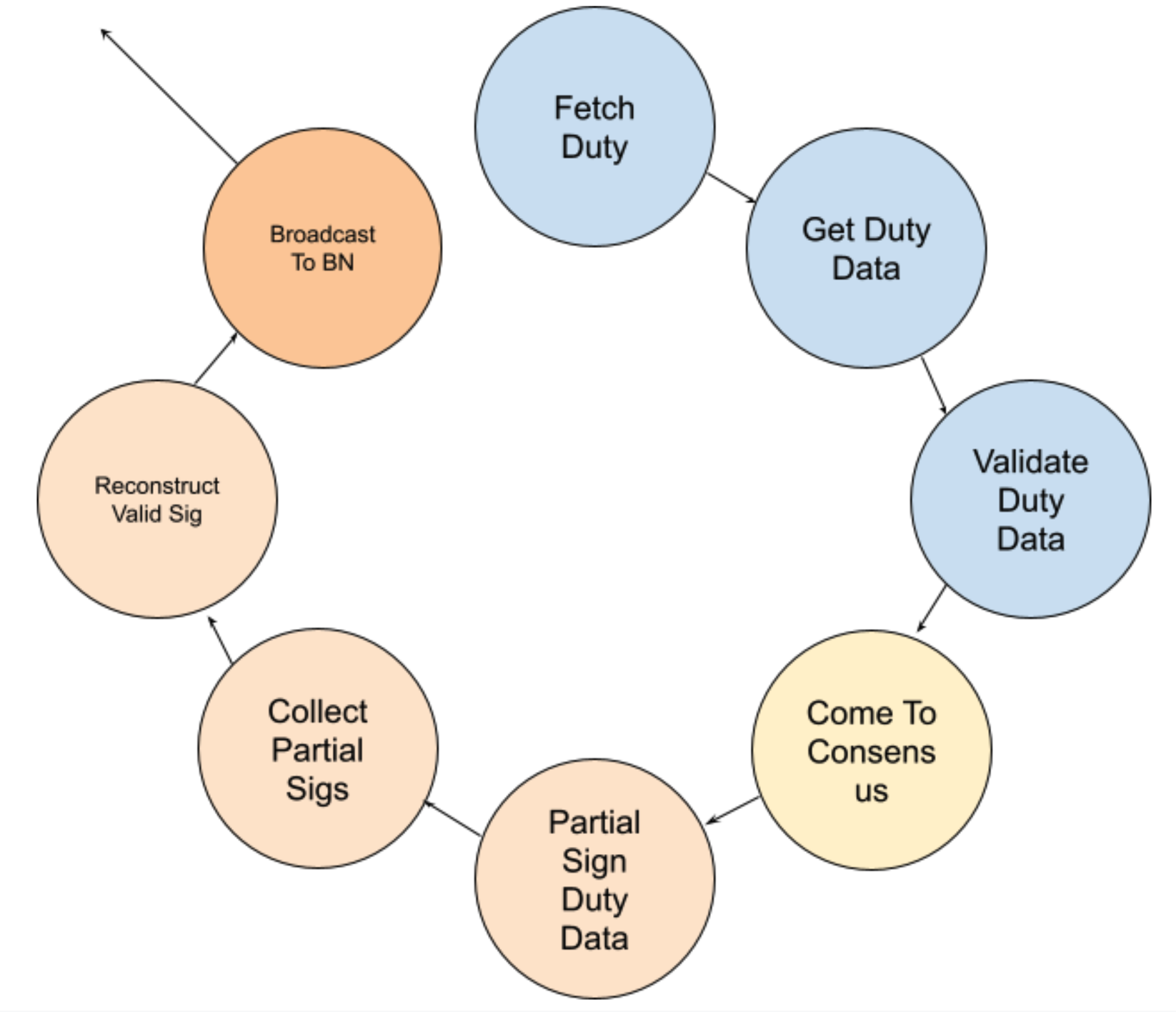

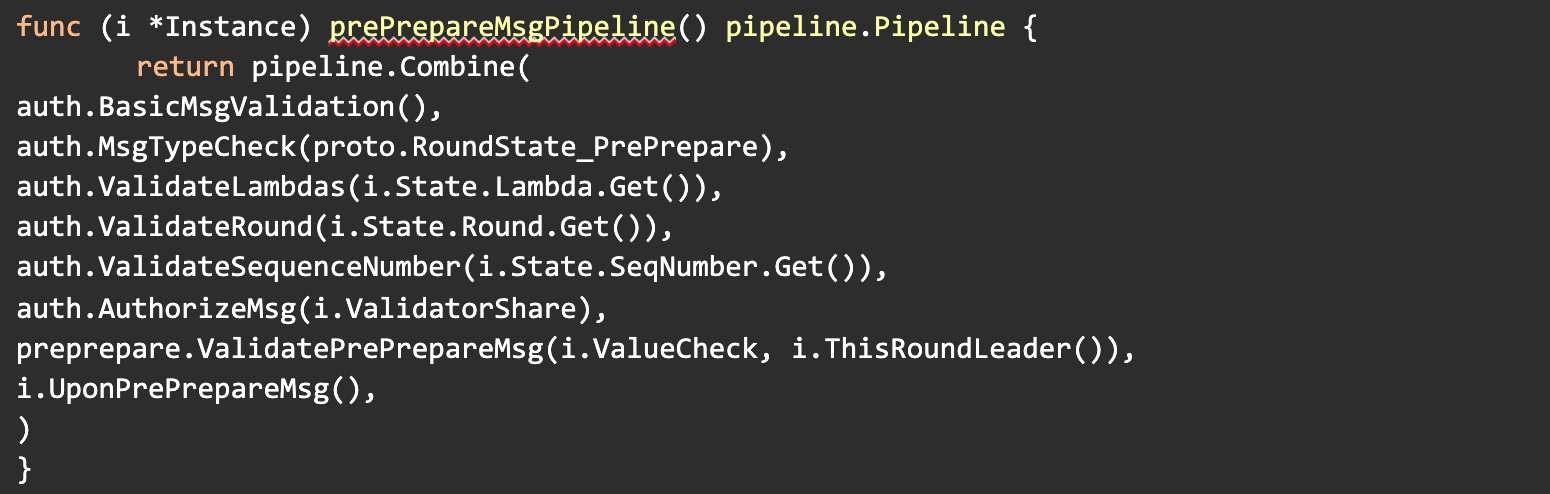

When a validator has a new duty scheduled, it triggers a pipeline of tasks that must all execute correctly for a valid signed duty to be broadcast to the beacon chain network.

The steps marked in blue are grouped together since they are very similar to a ‘normal’ validator client operation: scheduling a duty (within a particular slot), fetching the duty data (for an attestation, the attestation data) and validating the duty data.

Validating duty data should involve slashing protection as well; however, it’s not yet implemented in the SSV node. The slashing protection and signing methods need to be refactored completely, and that work is not done as of v0.0.15.

The duty data, in our case the attestation data, is the input for the next step — the consensus step.

Each node fetches the duty locally and will try to propose it to the other committee members to sign. There is no guarantee that the fetched attestation data will be decided on.

The consensus part of the pipeline is the heart of what makes SSV so robust and secure. Our implementation uses the QBFT protocol, a 3-step BFT protocol which finalizes every instance (comparable to blocks).

QBFT also prioritizes safety over liveness, which means that if more than f nodes are down, the committee cannot decide and it might take a while to recover.

To try and reduce the effects of the above we’ve implemented a quick sync protocol which is a major improvement.
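The fault threshold mentioned above can be written out as a few lines of arithmetic. This sketch assumes the usual BFT sizing of n = 3f+1 nodes with a quorum of 2f+1; the function names are illustrative.

```go
package main

import "fmt"

// MaxFaulty returns f, the number of faulty nodes tolerated,
// assuming the committee is sized as n = 3f+1.
func MaxFaulty(n int) int { return (n - 1) / 3 }

// Quorum returns the 2f+1 messages needed to move a step forward.
func Quorum(n int) int { return 2*MaxFaulty(n) + 1 }

// CanDecide reports whether enough nodes are online to reach quorum.
// When it is false the protocol stalls (no liveness) but never
// decides two different values (safety is kept).
func CanDecide(n, offline int) bool { return n-offline >= Quorum(n) }

func main() {
	n := 4
	fmt.Printf("n=%d f=%d quorum=%d\n", n, MaxFaulty(n), Quorum(n))
	for offline := 0; offline <= 2; offline++ {
		fmt.Printf("offline=%d can decide: %v\n", offline, CanDecide(n, offline))
	}
}
```

With 4 nodes, one node can be offline and the committee still decides; with two offline, it waits until at least one comes back.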

In a happy flow, QBFT has 3 message steps, followed by a 4th stage that is a state rather than a step:

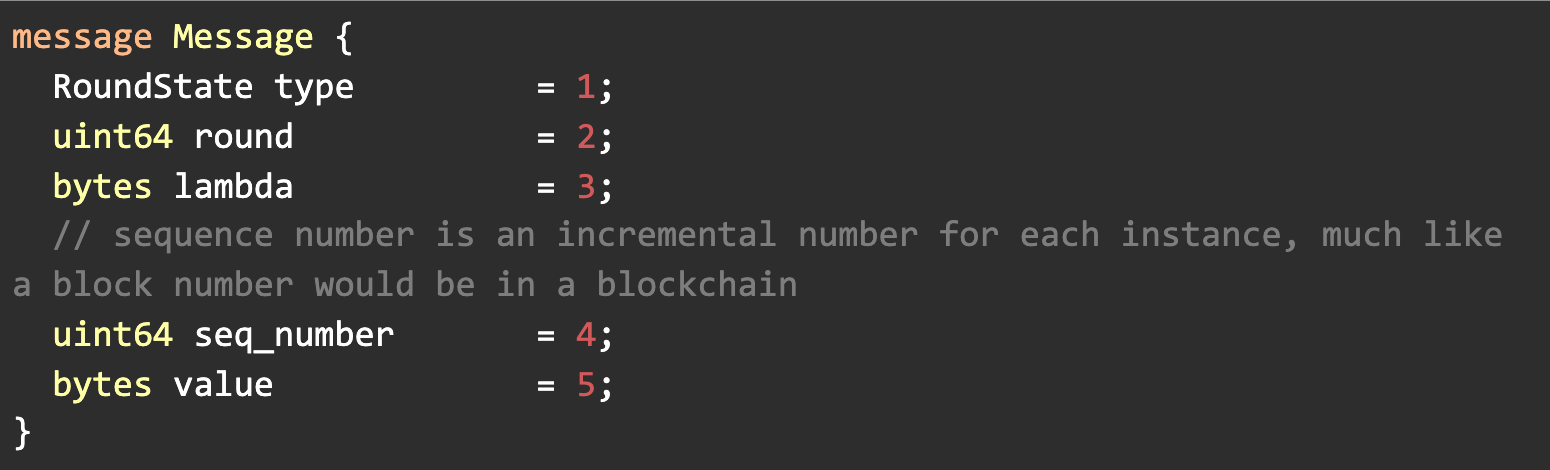
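The happy flow can be sketched as a tiny state machine: a pre-prepare from the leader, then a quorum of prepare messages, then a quorum of commit messages, after which the instance is decided. The type and method names below are hypothetical, and the sketch ignores values, rounds and signatures; out-of-order messages are simply dropped, whereas a real node would likely buffer them.

```go
package main

import "fmt"

// Stage names what the instance is currently waiting for.
type Stage int

const (
	AwaitingProposal Stage = iota // waiting for the leader's pre-prepare
	AwaitingPrepares              // waiting for a quorum of prepare messages
	AwaitingCommits               // waiting for a quorum of commit messages
	Decided                       // terminal state, not a message step
)

// Instance tracks one consensus instance (one duty).
type Instance struct {
	quorum   int
	stage    Stage
	prepares map[uint64]bool // distinct signers seen
	commits  map[uint64]bool
}

func NewInstance(quorum int) *Instance {
	return &Instance{quorum: quorum, prepares: map[uint64]bool{}, commits: map[uint64]bool{}}
}

// OnPrePrepare accepts the leader's proposal.
func (i *Instance) OnPrePrepare() {
	if i.stage == AwaitingProposal {
		i.stage = AwaitingPrepares
	}
}

// OnPrepare counts one prepare per signer and advances on quorum.
func (i *Instance) OnPrepare(signer uint64) {
	if i.stage != AwaitingPrepares {
		return
	}
	i.prepares[signer] = true
	if len(i.prepares) >= i.quorum {
		i.stage = AwaitingCommits
	}
}

// OnCommit counts one commit per signer and decides on quorum.
func (i *Instance) OnCommit(signer uint64) {
	if i.stage != AwaitingCommits {
		return
	}
	i.commits[signer] = true
	if len(i.commits) >= i.quorum {
		i.stage = Decided
	}
}

func main() {
	inst := NewInstance(3) // 4 nodes, quorum of 3
	inst.OnPrePrepare()
	for _, s := range []uint64{1, 2, 3} {
		inst.OnPrepare(s)
	}
	for _, s := range []uint64{1, 2, 3} {
		inst.OnCommit(s)
	}
	fmt.Println("decided:", inst.stage == Decided)
}
```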

A QBFT message has the below structure:

Instance x+1 can’t start if instance x hasn’t decided. An instance is the execution of a duty.
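The sequencing rule is easy to enforce with a small guard. The following is a sketch under the assumption that instances are numbered consecutively; `Controller`, `Start` and `Decide` are names invented for the example.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	ErrPreviousUndecided = errors.New("previous instance has not decided")
	ErrUnexpectedSeq     = errors.New("unexpected sequence number")
)

// Controller allows only one running instance at a time and
// only in sequence order.
type Controller struct {
	nextSeq uint64 // sequence number of the next instance allowed to start
	running bool   // true while an instance is undecided
}

// Start begins instance seq, or explains why it cannot.
func (c *Controller) Start(seq uint64) error {
	if c.running {
		return ErrPreviousUndecided
	}
	if seq != c.nextSeq {
		return ErrUnexpectedSeq
	}
	c.running = true
	return nil
}

// Decide marks the running instance as decided, unlocking the next one.
func (c *Controller) Decide() {
	if c.running {
		c.running = false
		c.nextSeq++
	}
}

func main() {
	c := &Controller{}
	fmt.Println("start 0:", c.Start(0))
	fmt.Println("start 1:", c.Start(1)) // rejected: instance 0 is undecided
	c.Decide()
	fmt.Println("start 1:", c.Start(1))
}
```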

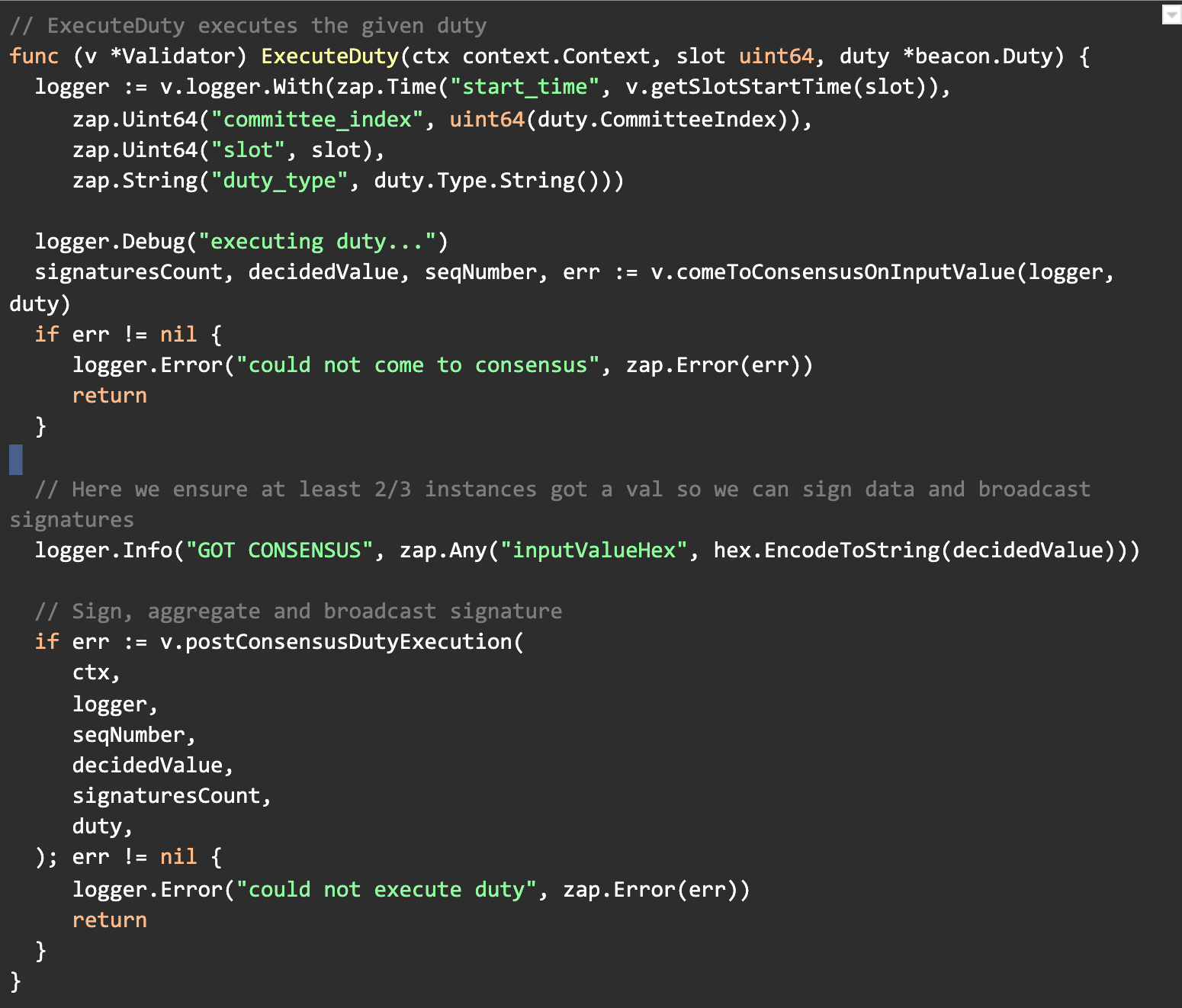

Each QBFT message goes through a pipeline of verification, validation and (upon confirmation) execution steps. This was created with a synchronous architecture in mind to simplify implementation.

The pre-prepare pipeline above takes a pre-prepare message as input and validates its content, type, identifier (lambda), round number, sequence number, signature and leader. If all checks pass, it executes the message according to the QBFT protocol.
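A synchronous pipeline like this maps naturally onto a list of functions that run in order and stop at the first error. The sketch below shows the pattern only: the `Message` fields, the `Check` helper and the concrete checks are assumptions, and signature verification is omitted.

```go
package main

import "fmt"

// Message is a simplified, hypothetical stand-in for a QBFT message.
type Message struct {
	Type      string
	Lambda    string
	Round     uint64
	SeqNumber uint64
	Signer    uint64
}

// Step is one stage of the pipeline; a non-nil error stops processing.
type Step func(m *Message) error

// Combine runs steps in order and returns the first error.
func Combine(steps ...Step) Step {
	return func(m *Message) error {
		for _, s := range steps {
			if err := s(m); err != nil {
				return err
			}
		}
		return nil
	}
}

// Check turns a boolean predicate into a named validation step.
func Check(name string, ok func(*Message) bool) Step {
	return func(m *Message) error {
		if !ok(m) {
			return fmt.Errorf("%s check failed", name)
		}
		return nil
	}
}

func main() {
	const leader = 2
	prePrepare := Combine(
		Check("type", func(m *Message) bool { return m.Type == "pre-prepare" }),
		Check("lambda", func(m *Message) bool { return m.Lambda == "abcd_ATTESTER" }),
		Check("round", func(m *Message) bool { return m.Round == 1 }),
		Check("sequence", func(m *Message) bool { return m.SeqNumber == 7 }),
		Check("leader", func(m *Message) bool { return m.Signer == leader }),
		// Execution step: only reached if every check above passed.
		func(m *Message) error {
			fmt.Println("executing pre-prepare for sequence", m.SeqNumber)
			return nil
		},
	)

	good := &Message{Type: "pre-prepare", Lambda: "abcd_ATTESTER", Round: 1, SeqNumber: 7, Signer: 2}
	bad := &Message{Type: "pre-prepare", Lambda: "abcd_ATTESTER", Round: 1, SeqNumber: 7, Signer: 3}
	fmt.Println("good message error:", prePrepare(good))
	fmt.Println("bad message error: ", prePrepare(bad))
}
```

Keeping each check as its own step makes it straightforward to reuse the same checks in the prepare and commit pipelines.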

If the committee can’t decide within the first round, a change round protocol is initiated. It uses exponential timeouts: each time a round fails, the committee tries again with a longer timeout, until it reaches consensus.

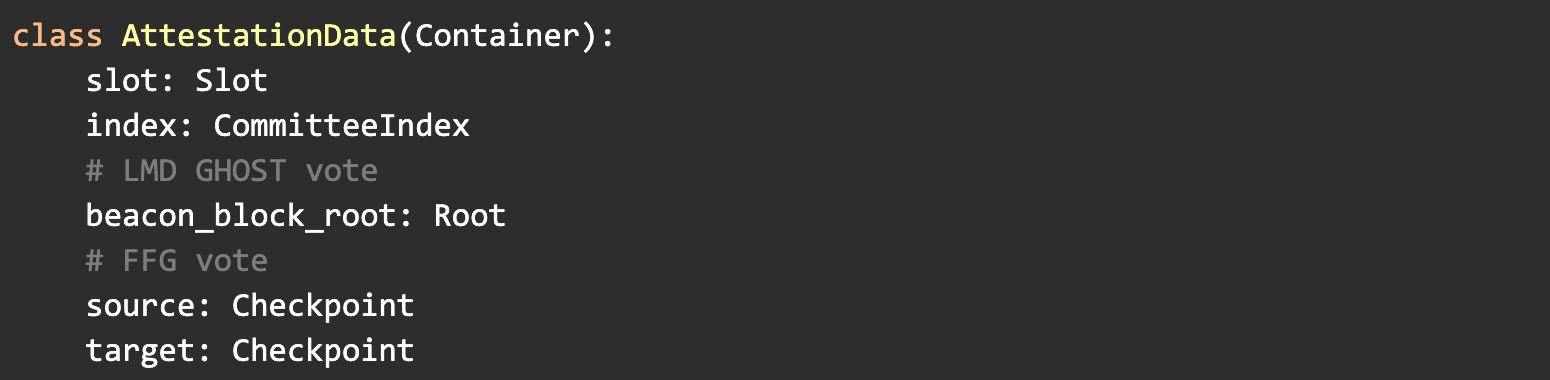

The consensus step returns a decided value which should be a valid attestation data struct, as defined in the eth2.0 spec.
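For reference, here is a Go transcription of the `AttestationData` and `Checkpoint` containers from the consensus spec. The field layout follows the spec; the Go type names and the `SanityCheck` helper are my own additions and only show the kind of basic check a node could run on a decided value (it assumes the mainnet preset of 32 slots per epoch and is not the node's actual validation).

```go
package main

import "fmt"

// slotsPerEpoch is the mainnet preset SLOTS_PER_EPOCH.
const slotsPerEpoch = 32

// Root is a 32-byte hash tree root.
type Root [32]byte

// Checkpoint mirrors the spec's Checkpoint container.
type Checkpoint struct {
	Epoch uint64
	Root  Root
}

// AttestationData mirrors the spec's AttestationData container.
type AttestationData struct {
	Slot            uint64
	Index           uint64 // committee index
	BeaconBlockRoot Root   // LMD GHOST vote
	Source          Checkpoint // FFG vote: source
	Target          Checkpoint // FFG vote: target
}

// SanityCheck is a hypothetical helper: it checks that the target
// epoch matches the slot and that the source is not after the target.
func SanityCheck(d AttestationData) error {
	if d.Target.Epoch != d.Slot/slotsPerEpoch {
		return fmt.Errorf("target epoch %d does not match slot %d", d.Target.Epoch, d.Slot)
	}
	if d.Source.Epoch > d.Target.Epoch {
		return fmt.Errorf("source epoch %d is after target epoch %d", d.Source.Epoch, d.Target.Epoch)
	}
	return nil
}

func main() {
	d := AttestationData{
		Slot:   65, // slot 65 falls in epoch 2
		Index:  3,
		Source: Checkpoint{Epoch: 1},
		Target: Checkpoint{Epoch: 2},
	}
	fmt.Println("sanity check error:", SanityCheck(d))
}
```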

The next steps sign the attestation data with the share key, resulting in a partial signature which is broadcast to all the other members. 2f+1 partial signatures are required to reconstruct a valid signature (one that verifies against the validator’s public key).

Waiting for the other signatures is straightforward, limited only by a timeout.

It’s important to note that this step may fail even if consensus was achieved. For example, 2f nodes could drop offline, which would leave too few partial signatures collected. If this step fails, the validator will miss an attestation.

Signature reconstruction uses the native Herumi BLS library functions. Behind the scenes, it uses Lagrange interpolation to reconstruct a valid signature. I’ve written about it here and Dash research wrote about it here.

The SSV protocol and implementation should be as clear and deterministic as possible. One of the goals the community should have is a formal spec for SSV that can serve as a reference and as a basis for additional implementations.